Developing internal world models for artificial agents opens an efficient channel for humans to communicate with and control them.

In addition to updating policies, humans can modify the world models of these agents in order to influence their decisions.

The challenge, however, is that existing world models are difficult for humans to adapt because they lack a natural communication interface.

Aimed at addressing this shortcoming, we develop Language-Guided World Models (LWMs), which can capture environment dynamics by reading language descriptions.

These models make communication with agents more efficient, allowing humans to alter an agent's behavior on multiple tasks simultaneously with concise language feedback.

They also enable agents to self-learn from texts originally written to instruct humans.

To facilitate the development of LWMs, we design a challenging benchmark based on the game of MESSENGER (Hanjie et al., 2021), requiring compositional generalization to new language descriptions and environment dynamics.

Our experiments reveal that the current state-of-the-art Transformer architecture performs poorly on this benchmark, motivating us to design a more robust architecture.

To showcase the practicality of our proposed LWMs, we simulate a scenario where these models augment the interpretability and safety of an agent by enabling it to generate and discuss plans with a human before execution.

By effectively incorporating language feedback on the plan, the models boost the agent's performance in the real environment by up to three times without collecting any interactive experiences in this environment.

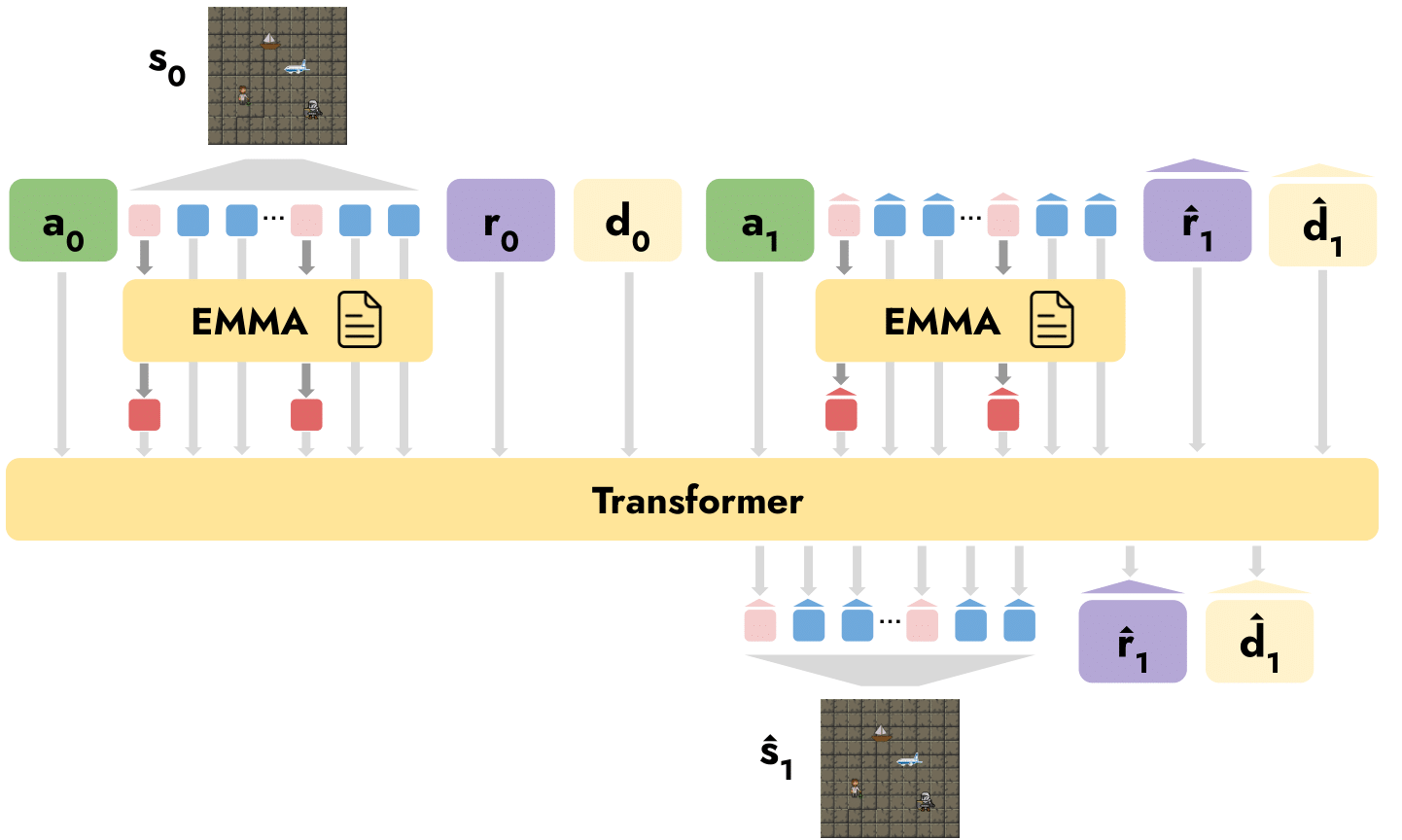

Learning LWMs poses a challenging problem involving the retrieval and incorporation of information expressed in different modalities. Our model is an encoder-decoder Transformer which encodes a manual and decodes a trajectory. We transform the trajectory into a long sequence of tokens and train the model as a sequence generator. We implement a specialized attention mechanism inspired by EMMA (Hanjie et al., 2021) to incorporate textual information into the observation tokens.
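To make the idea of incorporating textual information into observation tokens more concrete, here is a minimal sketch of an attention step in which an entity embedding queries the descriptions in the manual. It is an illustration under stated assumptions, not the implementation used in our model: the function `attend_to_manual`, the scaled dot-product scoring, and the toy vectors are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend_to_manual(entity_query, description_keys, description_values):
    """Hypothetical helper: one entity attends over all manual descriptions.

    Returns the attention weights and the weighted sum of description values,
    which could then be merged into the entity's observation token.
    """
    scale = math.sqrt(len(entity_query))
    scores = [dot(entity_query, key) / scale for key in description_keys]
    weights = softmax(scores)
    dim = len(description_values[0])
    context = [
        sum(w * value[i] for w, value in zip(weights, description_values))
        for i in range(dim)
    ]
    return weights, context


# Toy example (made-up vectors): three descriptions, one entity query.
entity_query = [4.0, 0.0]
description_keys = [[4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]
description_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

weights, context = attend_to_manual(entity_query, description_keys, description_values)
best_description = weights.index(max(weights))
```

In this toy setup the query aligns with the first key, so almost all of the attention mass falls on the first description and `context` is close to that description's value vector.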

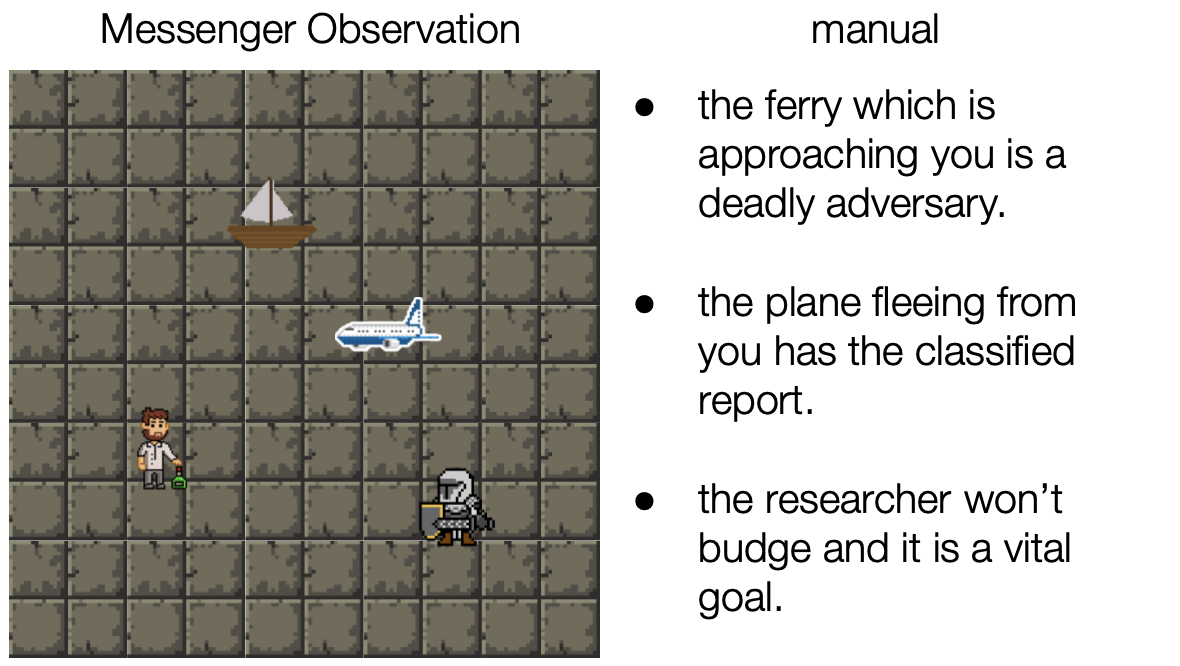

Our goal is to build world models that can generalize to compositionally novel texts and environment dynamics. We construct a challenging benchmark based on the MESSENGER environment to evaluate this capability. In MESSENGER, a player must pick up a message entity and deliver it to a goal entity without colliding with an enemy entity. A manual describes the identity and attributes of the entities. A model is tested on previously unseen environments that are increasingly dissimilar to the training environments.
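One way to picture compositional novelty is a split in which every entity and every role appears during training, but certain entity-role pairings are reserved for evaluation. The sketch below is only an illustration of that idea; the entity list, the held-out pairs, and the variable names are assumptions and do not reproduce the benchmark's actual splits.

```python
from itertools import product

# Illustrative names only; not the benchmark's actual entity set.
entities = ["queen", "whale", "robot"]
roles = ["message", "goal", "enemy"]

all_pairs = list(product(entities, roles))

# Hypothetical held-out pairings: never seen together during training.
held_out = {("queen", "goal"), ("whale", "message"), ("robot", "enemy")}

train_pairs = [pair for pair in all_pairs if pair not in held_out]
test_pairs = [pair for pair in all_pairs if pair in held_out]

# Every entity and every role is still covered in training,
# so only the combinations are novel at test time.
train_entities = {entity for entity, _ in train_pairs}
train_roles = {role for _, role in train_pairs}
```

A model that has merely memorized which entity plays which role in training would fail on `test_pairs`; it has to read the manual to recover the correct assignment.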

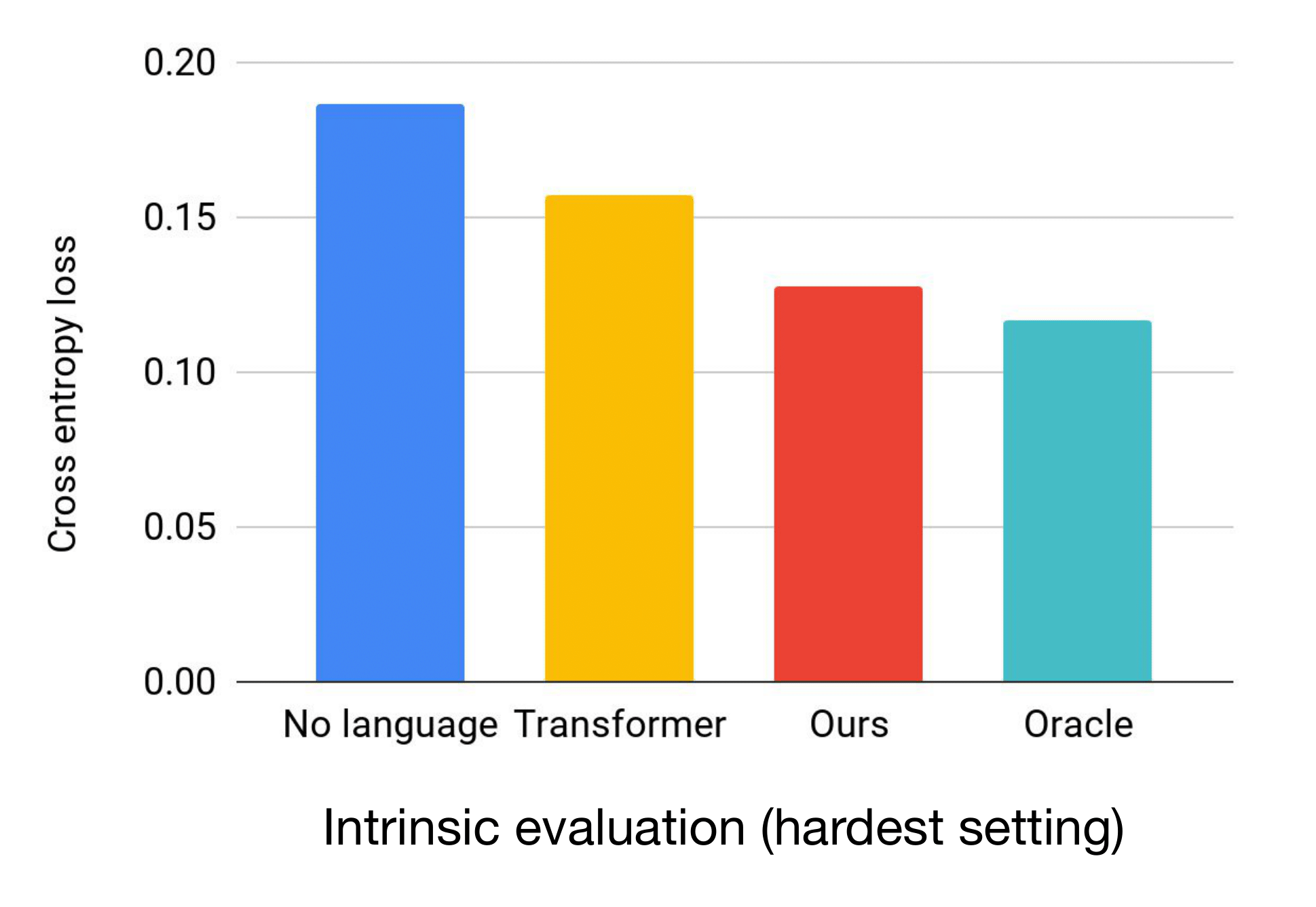

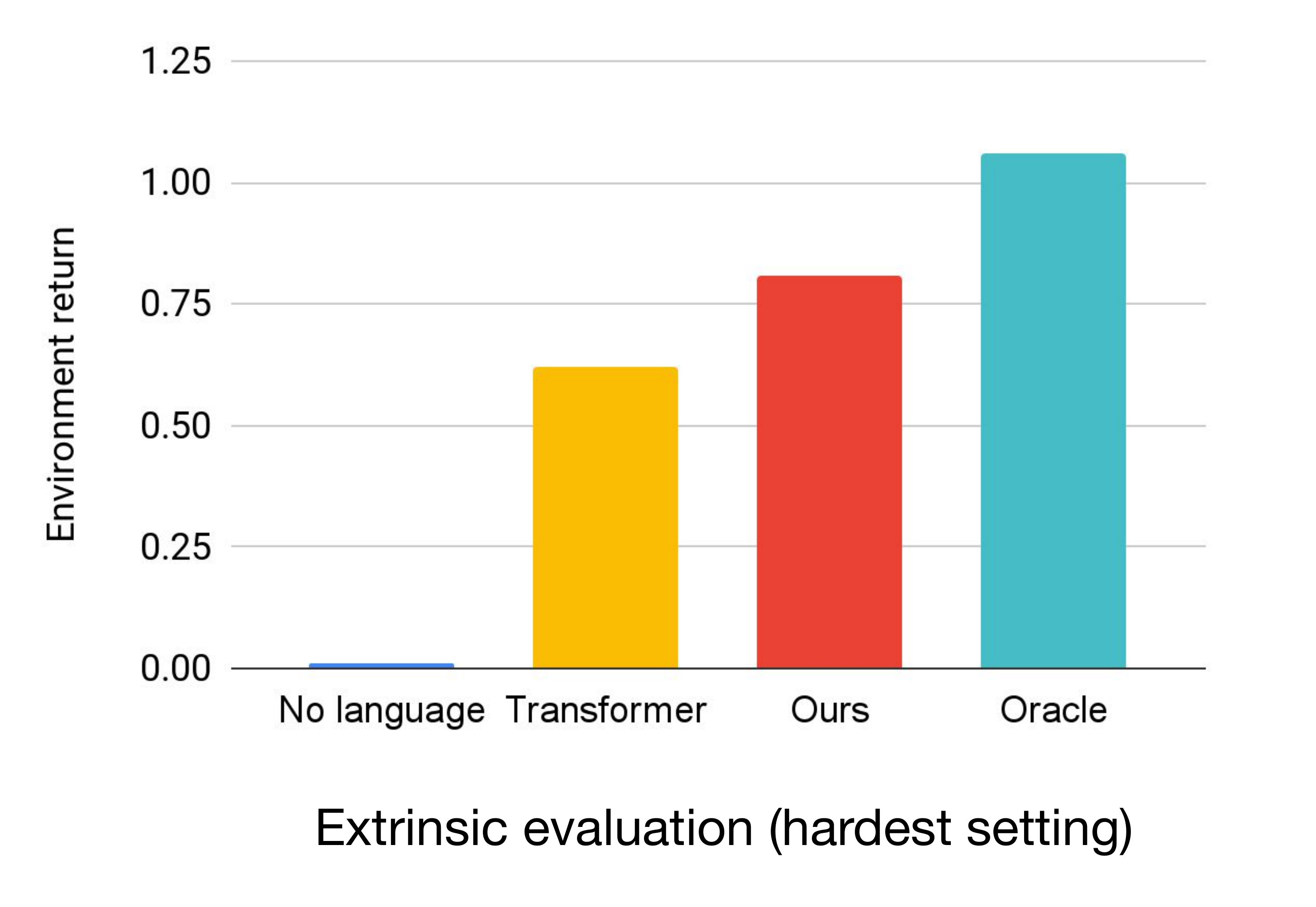

We demonstrate the effectiveness of our proposed model through both intrinsic and extrinsic evaluations. The intrinsic evaluation measures the prediction loss of the model when conditioned on ground-truth observations (the paper also reports results with self-generated trajectories). The extrinsic evaluation simulates the scenario we describe at the top of this page, in which an agent learns from a human using its world model, without interacting with the real environment. In both evaluations, our model outperforms the standard encoder-decoder Transformer and approaches the performance of an oracle with a perfect semantic-parsing capability.
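As a rough illustration of what conditioning on ground-truth observations means, the sketch below scores each token of a trajectory given the true prefix rather than the model's own earlier predictions. It assumes an average negative log-likelihood as the loss and a toy `uniform_model`; both are hypothetical stand-ins, and the exact loss and tokenization used in the paper may differ.

```python
import math


def teacher_forced_loss(predict_next, trajectory_tokens):
    """Average negative log-likelihood of each token given the true prefix.

    `predict_next` is a hypothetical callable mapping a prefix (list of tokens)
    to a dict of next-token probabilities.
    """
    total = 0.0
    for t, target in enumerate(trajectory_tokens):
        prefix = trajectory_tokens[:t]  # ground truth, not model samples
        probs = predict_next(prefix)
        total += -math.log(probs[target])
    return total / len(trajectory_tokens)


def uniform_model(prefix):
    """Toy stand-in for a world model: ignores the prefix entirely."""
    vocab = ["left", "right", "up", "stay"]
    return {token: 1.0 / len(vocab) for token in vocab}


loss = teacher_forced_loss(uniform_model, ["left", "left", "stay"])
```

With a uniform model over four tokens the loss equals `log(4)`; a model that actually uses the manual and the prefix should score lower. Evaluating on self-generated trajectories would instead feed the model's own predictions back in as the prefix.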

Below, we show a qualitative example taken from our hardest evaluation setting. The Observational (no language) model mistakenly captures the movement patterns of the immobile queen goal and the chasing whale message. It also misrecognizes the whale as an enemy, predicting a wrong reward after the player collides with this entity. GPTHard is an approach that leverages ChatGPT to ground descriptions to entities. It falsely identifies the queen as the message and predicts the whale to be fleeing. Meanwhile, our model (EMMA) captures all of those roles and movements accurately.

@misc{zhang2024languageguided,
  title={Language-Guided World Models: A Model-Based Approach to AI Control},
  author={Alex Zhang and Khanh Nguyen and Jens Tuyls and Albert Lin and Karthik Narasimhan},
  year={2024},
  eprint={2402.01695},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}